Projects

Our ongoing and completed projects span diverse areas. Students from the Bogazici University Cognitive Science MS program and the Education Technology programs conduct experiments with participants. Students from the Computer Science Department build virtual environments for these experiments or for machine learning applications.

Ongoing Projects

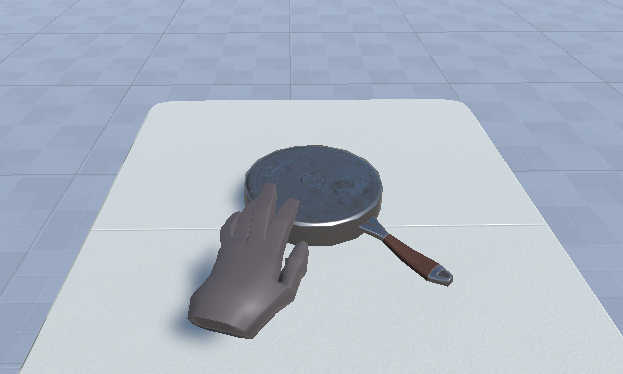

The Effect of Affordances on Perceived Action Durations

The project investigates how affordance perception may affect perceived interval durations during motor action, contributing to the nascent research linking affordances to interval timing. In this study, we use two avatar hand types: normal and pill-shaped (fingerless). We are investigating whether the time participants perceive to have passed during a motor action (reaching for a pan) differs depending on the hand type (normal, which affords grasping the pan handle; pill-shaped, which affords no grasping). A Leap Motion hand tracker is used with an HTC Vive headset.

Glacier Boat

This project provides an Arctic sea environment in which the player navigates a boat. The exploratory environment contains melting icebergs, glaciers, and animated animals. The player can interact with the virtual environment by driving the boat and picking up floating objects. The project is designed to complement the 360° VR documentary “This is Climate Change: Melting Ice”, in which former Vice President Al Gore takes viewers on a transcendent exploration into the devastating consequences of our changing climate.

Forest Experience: Explore and Recycle

An immersive, realistic forest environment that the player can move through and explore. The surroundings contain points of interest and animals. Users can pick up trash objects and sort them into plastic, metal, glass, and paper recycling containers. They receive positive or negative voice feedback depending on these actions. The environment also includes an optional tutorial that teaches first-time users the basic mechanics.

Audiovisual Saliency Cues in VR

This research examines how contextual cues affect audiovisual integration processes when determining saliency. Various 360° panoramic videos with ambisonic audio are presented on an HTC Vive VR headset, and eye-tracking data are analyzed for the different types of videos. Variables include sound type (human, animal, nature, vehicle, music) and scene type (indoors, outdoors-natural, outdoors-human-made).

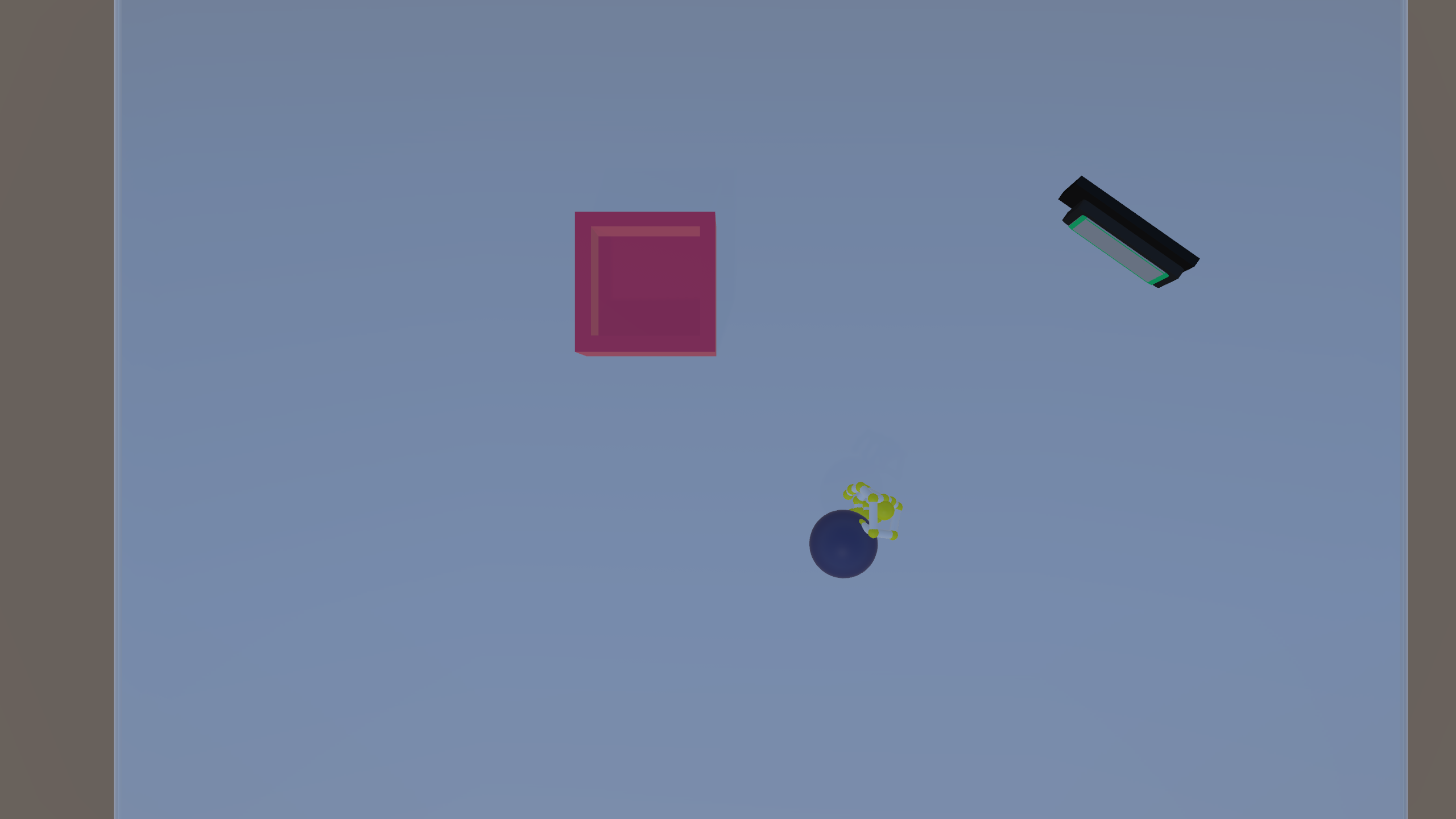

Abstract Judgement and Lifelong Learning with Symbol and Rule Discovery

The project builds on a scientific article. It aims to provide training data for the ML algorithm described in that article, which is normally trained on raw data. The task is to build a tower of a certain height by stacking the objects available in the environment on top of each other. The project uses an HTC Vive Pro integrated with an Ultraleap hand tracker. The environment simulates friction, collision, and gravity as in natural settings.

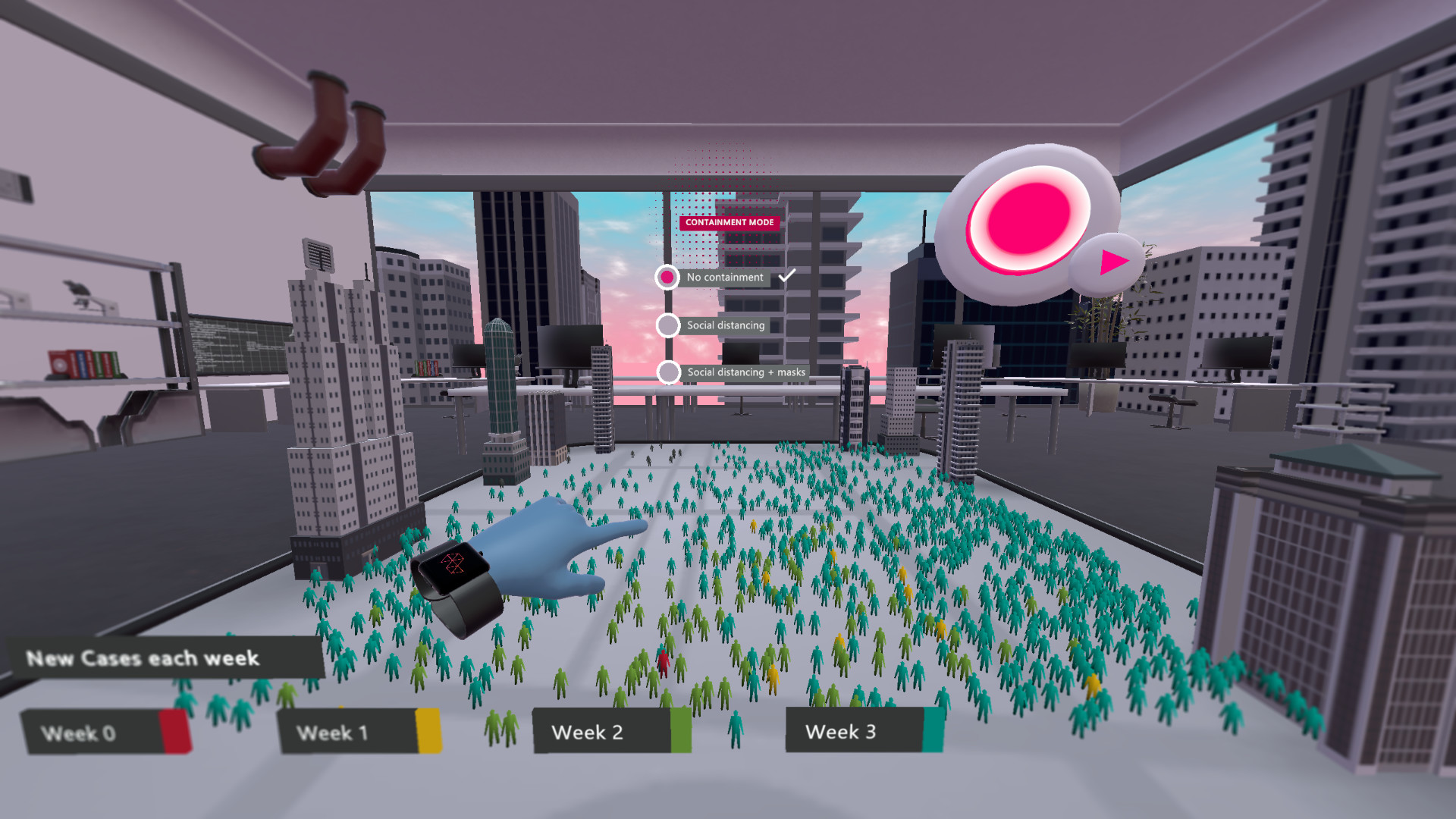

Studying Preservice Teachers’ SRL via VR Technologies

This study aimed to explore preservice teachers’ self-regulated learning (SRL) as they engaged in immersive VR applications and to study the links between teachers’ use of SRL in their own learning as measured by multimodal data. The study was conducted as part of an international study titled “STEM Teachers’ Capacity to Teach Self-Regulated Learning: Effectiveness of Extended Reality” (https://earli.org/efg), and certain decisions regarding data collection were made jointly with the international collaborators.

Completed Projects

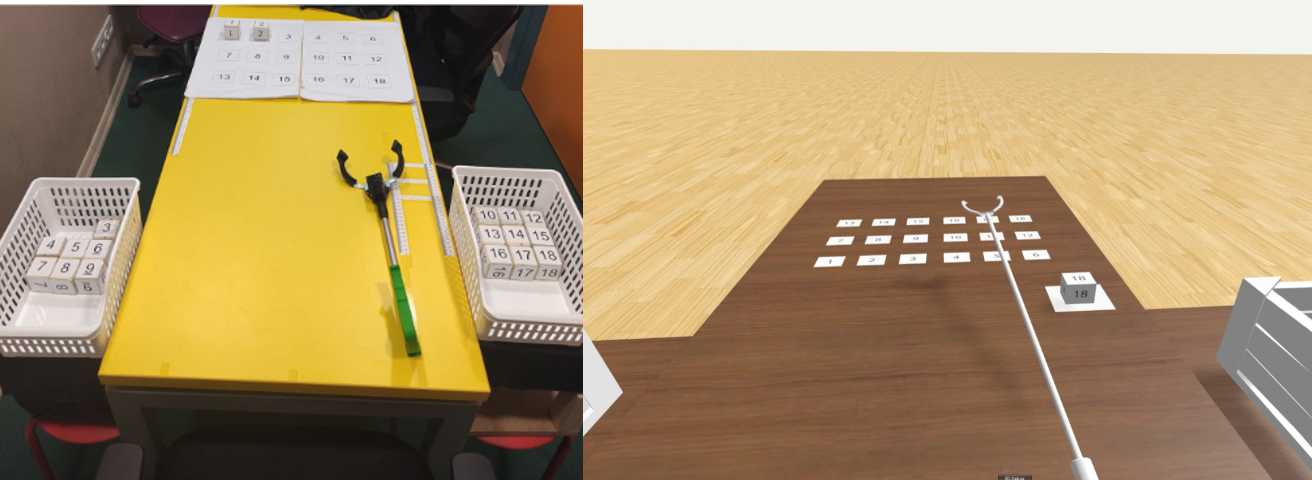

Body Schema During Tool-Use via VR Technologies

This study compares how tool-use experiments affect body representation in a virtual environment versus a physical environment. It aims to evaluate the tool-use paradigm in these two experimental environments and to compare participants’ degree of adaptation to tool use in each, using forearm bisection measurement.

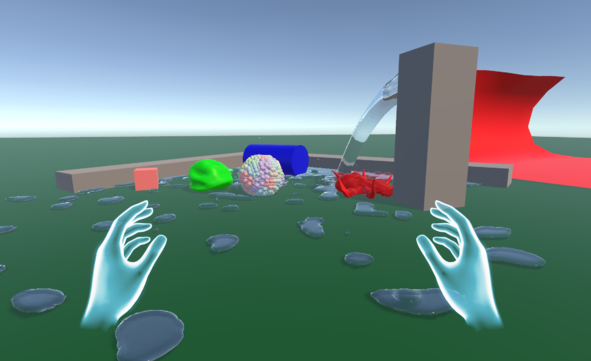

Real-Time Fluid, Cloth, and Soft-Body Physics Simulation in VR

The project aims to introduce realistic physics simulation into virtual reality. The focus is on enhancing the experience of interacting with fluids, cloth, and soft, deformable objects. New mechanics include tearing, friction, adhesion, buoyancy, melting, surface tension, viscosity, plasticity, and wind. The objective is achieved through particle simulation.